Welcome to the Website of the CEAP-360VR Dataset

Watching 360° videos using Virtual Reality (VR) head-mounted displays (HMDs) provides interactive and immersive experiences in which videos can evoke a range of emotions. However, existing techniques for self-reporting emotions in VR either collect reports retrospectively or interrupt the immersive experience.

To address this, we introduce the Continuous Physiological and Behavioral Emotion Annotation Dataset for 360° Videos (CEAP-360VR). In a controlled user study, each of 32 participants watched eight one-minute 360° video clips, and we make the resulting CEAP-360VR dataset publicly available. The dataset contains:

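- questionnaires: the Simulator Sickness Questionnaire (SSQ), the igroup Presence Questionnaire (IPQ), and the NASA Task Load Index (NASA-TLX);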

- continuous valence-arousal annotations;

- head and eye movements, as well as left and right pupil diameters, recorded while participants watched the videos;

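- peripheral physiological responses: acceleration (ACC), electrodermal activity (EDA), skin temperature (SKT), blood volume pulse (BVP), heart rate (HR), and inter-beat interval (IBI).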

The dataset also includes the data pre-processing and data validation scripts, along with a description of the dataset and of the key steps in data acquisition and pre-processing.
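A common first pre-processing step for wristband data is putting signals recorded at different rates onto one time base; the Empatica E4, for example, streams BVP at 64 Hz but EDA and skin temperature at 4 Hz and heart rate at 1 Hz. The following is a minimal sketch of that idea, assuming each signal is available as arrays of timestamps (in seconds) and values. It uses linear interpolation plus per-trial z-scoring; the function names, the 4 Hz target rate, and the toy signals are hypothetical and are not the dataset's own pre-processing scripts.

```python
import numpy as np

def resample_uniform(t, x, fs_target, t_start, t_end):
    # Build a uniform time grid [t_start, t_end) at fs_target Hz and linearly
    # interpolate the signal onto it (values beyond the last sample are held constant).
    t = np.asarray(t, dtype=float)
    x = np.asarray(x, dtype=float)
    n = int(round((t_end - t_start) * fs_target))
    grid = t_start + np.arange(n) / fs_target
    return grid, np.interp(grid, t, x)

def zscore(x):
    # Standardize to zero mean and unit variance; a flat signal is only mean-centered.
    x = np.asarray(x, dtype=float)
    sd = x.std()
    return (x - x.mean()) / sd if sd > 0 else x - x.mean()

# Hypothetical example: one minute of 1 Hz heart rate and 4 Hz EDA,
# both aligned to a common 4 Hz grid.
t_hr = np.arange(0, 60, 1.0)
hr = 70 + 5 * np.sin(t_hr / 10)
t_eda = np.arange(0, 60, 0.25)
eda = 0.3 + 0.01 * t_eda

grid, hr_4hz = resample_uniform(t_hr, hr, 4.0, 0.0, 60.0)
_, eda_4hz = resample_uniform(t_eda, eda, 4.0, 0.0, 60.0)
features = np.column_stack([zscore(hr_4hz), zscore(eda_4hz)])
print(features.shape)  # (240, 2)
```

Linear interpolation is the simplest choice; for downsampling a fast signal such as BVP, low-pass filtering before resampling would avoid aliasing.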

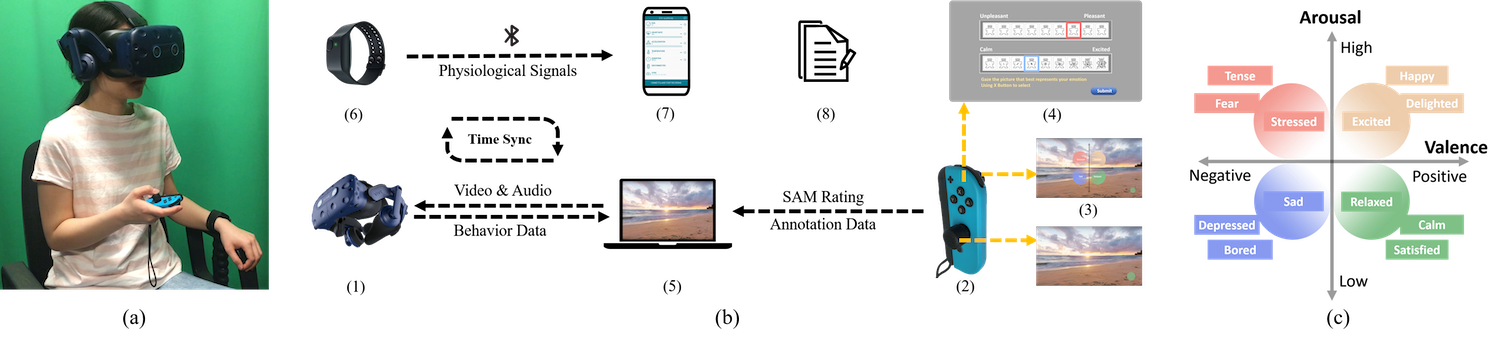

(a) A participant in our experiment watching a 360° video on the HTC VIVE Pro Eye HMD and annotating her emotional state with a Joy-Con controller while wearing an Empatica E4 wristband. (b) System schematic of the experimental set-up and data acquisition. (c) The valence-arousal space based on Russell's circumplex model.
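In the valence-arousal space of panel (c), a continuous annotation sample can be read as a point and assigned to one of four quadrants. The sketch below shows that mapping, assuming ratings on a scale whose neutral midpoint is passed in as `neutral`; the default of 5.0 (a 1-9 scale) and the example emotion words are illustrative assumptions, not values taken from the dataset description.

```python
# Example emotion words often placed in each quadrant of Russell's circumplex.
QUADRANT_EXAMPLES = {
    "HVHA": "excited, happy",
    "HVLA": "calm, relaxed",
    "LVHA": "tense, afraid",
    "LVLA": "sad, bored",
}

def va_quadrant(valence, arousal, neutral=5.0):
    # `neutral` is the scale midpoint. The default of 5.0 assumes a 1-9 scale,
    # which is an assumption, not taken from the dataset description.
    # Ratings exactly at the midpoint are counted as "high".
    v = "HV" if valence >= neutral else "LV"
    a = "HA" if arousal >= neutral else "LA"
    return v + a

label = va_quadrant(7.2, 3.1)
print(label, QUADRANT_EXAMPLES[label])  # HVLA calm, relaxed
```

Counting midpoint ratings as "high" is an arbitrary tie-break; an analysis could instead treat a band around the midpoint as a separate neutral class.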

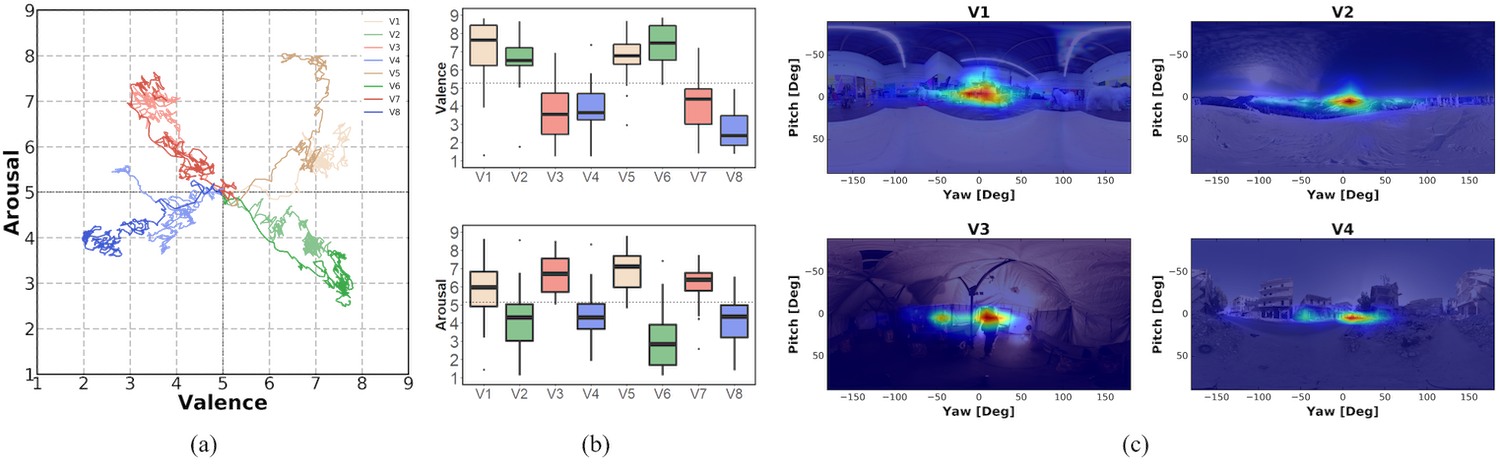

(a) Combined annotation trajectories of the 32 participants for the eight selected videos. (b) Boxplots of the mean valence and arousal ratings. (c) A sample thumbnail frame and its saliency map for each of V1–V4, based on data from all 32 participants.
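Boxplots of mean ratings, as in panel (b), summarize one time-averaged value per participant and video. A minimal sketch of that aggregation follows, assuming each trajectory is a uniformly sampled array of `[valence, arousal]` rows keyed by `(participant_id, video_id)`; this layout, the participant IDs, and the toy values are hypothetical and do not describe the dataset's actual file format.

```python
import numpy as np
from collections import defaultdict

def mean_ratings_per_video(trajectories):
    # trajectories: {(participant_id, video_id): array-like of shape (T, 2)}
    # holding [valence, arousal] samples (hypothetical layout).
    # Returns {video_id: array of shape (n_participants, 2)} of time-averaged ratings.
    per_video = defaultdict(list)
    for (pid, vid), samples in sorted(trajectories.items()):
        per_video[vid].append(np.asarray(samples, dtype=float).mean(axis=0))
    return {vid: np.vstack(means) for vid, means in per_video.items()}

def box_stats(values):
    # Lower quartile, median, and upper quartile: the box a boxplot draws.
    q1, med, q3 = np.percentile(values, [25, 50, 75])
    return {"q1": q1, "median": med, "q3": q3}

# Toy data: three hypothetical participants annotating video V1.
toy = {
    ("P01", "V1"): [[6, 4], [7, 5], [8, 6]],
    ("P02", "V1"): [[5, 5], [5, 5]],
    ("P03", "V1"): [[3, 2], [5, 4]],
}
means = mean_ratings_per_video(toy)
print(means["V1"][:, 0])             # per-participant mean valence: [7. 5. 4.]
print(box_stats(means["V1"][:, 0]))  # q1 4.5, median 5.0, q3 6.0
```

A plain time average is only meaningful when samples are evenly spaced; irregular annotation timestamps would need resampling or time-weighted averaging first.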