Figure: Reference and Distorted point clouds, shown in Front View and Back View.

Introduction

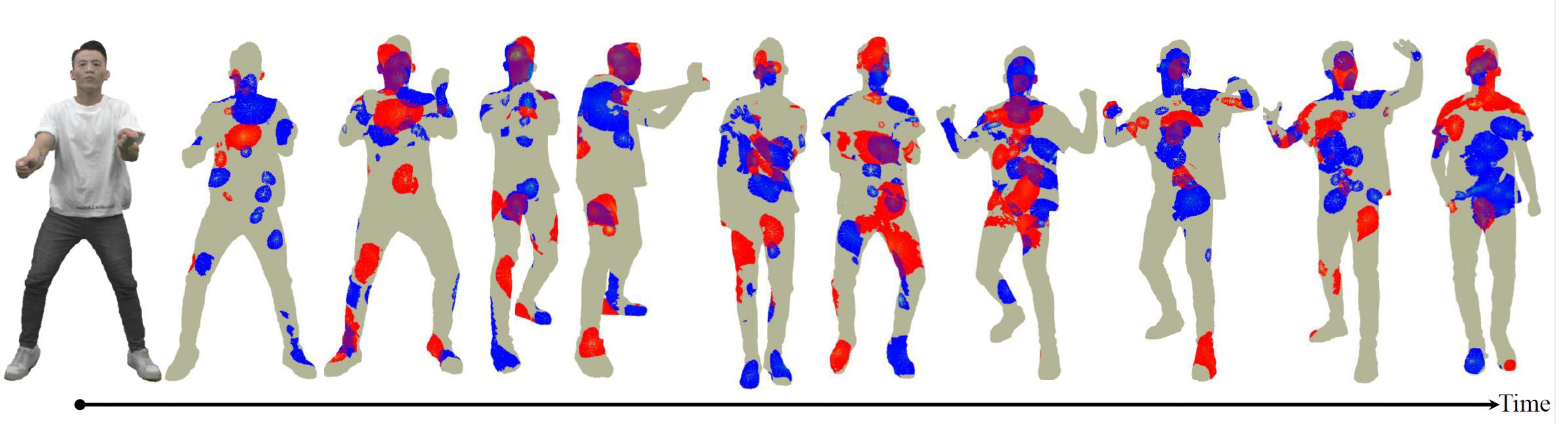

This research introduces two complementary eye-tracking datasets for studying visual attention to Dynamic Point Clouds (DPCs) in Virtual Reality (VR) environments. We compare task-free viewing (24 participants, 19 DPCs) with task-dependent quality assessment (40 participants, 5 reference DPCs with multiple distortions). Our analysis, based on Pearson correlation and an adapted Earth Mover's Distance metric, reveals significant differences in visual attention between the two paradigms. This work links quality assessment to visual attention in DPCs and provides benchmark data for improving VR experiences and visual saliency models.
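The Pearson correlation coefficient (CC) used to compare saliency maps is illustrated in the sketch below. It assumes that each saliency map is a list with one value per point of the same point cloud, in the same point order. The function name `pearson_cc` is hypothetical, and the sketch does not reproduce the study's adapted Earth Mover's Distance.

```python
import math
from typing import Sequence


def pearson_cc(a: Sequence[float], b: Sequence[float]) -> float:
    """Pearson correlation coefficient between two per-point saliency maps.

    Assumption (hypothetical helper): a[i] and b[i] are the saliency
    values of the same point i in the same point cloud.
    """
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("maps must have equal length of at least 2")
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    # Covariance and variances, without the 1/n factor, which cancels out.
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        raise ValueError("correlation is undefined for a constant map")
    return cov / math.sqrt(var_a * var_b)


# Usage: two toy maps over the same four points.
task_free = [0.1, 0.4, 0.9, 0.2]
task_dependent = [0.2, 0.5, 0.8, 0.1]
cc = pearson_cc(task_free, task_dependent)
```

A CC of 1 means the two maps rise and fall together across points. A CC of 0 means there is no linear relationship between them, and negative values indicate opposite attention patterns.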

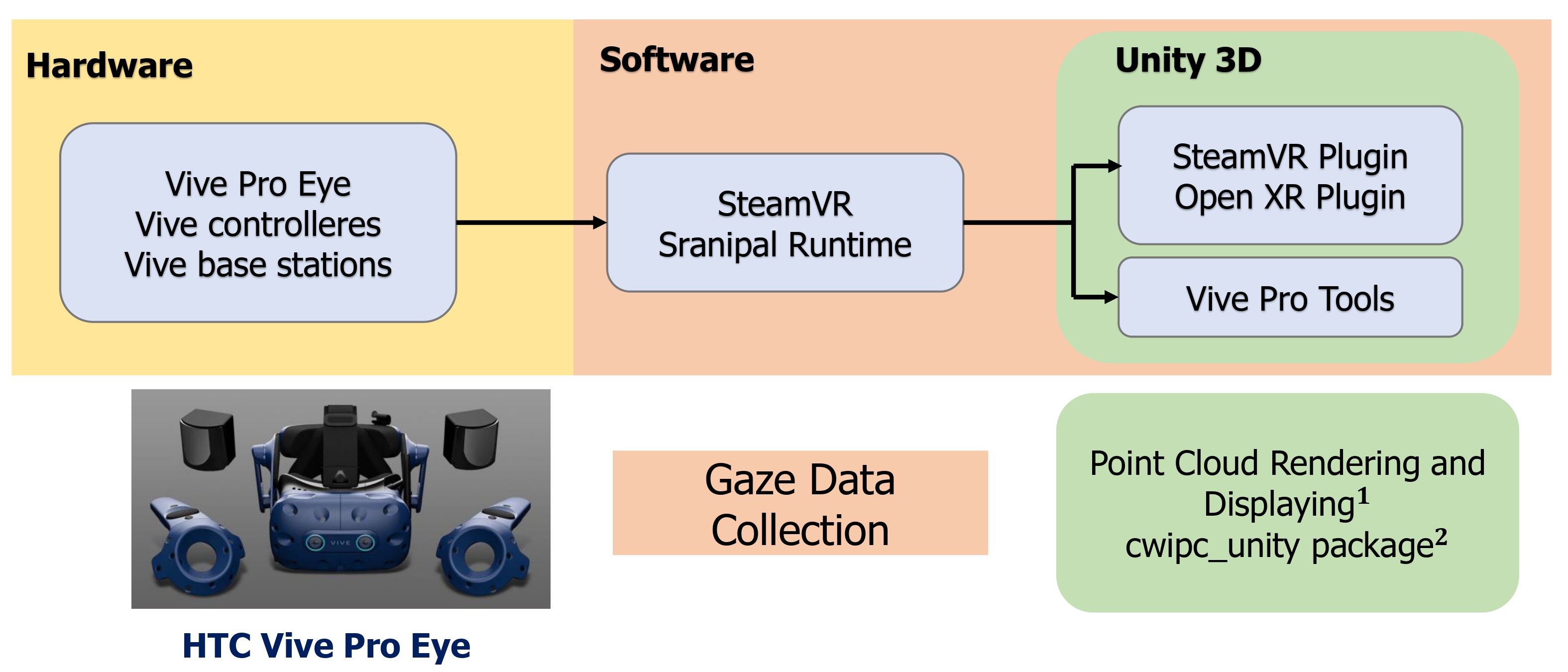

Apparatus

Experimental Design

Data Processing

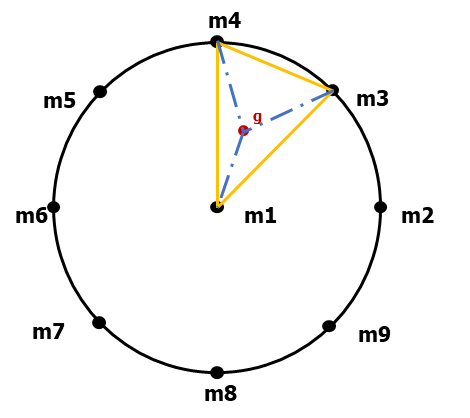

Data Collection: Angular Error Estimation

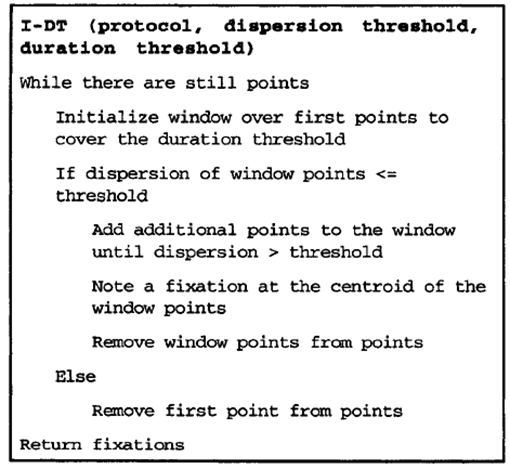
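Angular error in eye tracking is commonly estimated by asking participants to fixate known targets. The error is then the angle between each recorded gaze direction and the direction from the eye to the target. The sketch below assumes that eye position, gaze directions, and target positions are all expressed in one world coordinate frame. The function names are hypothetical, and the study's exact calibration protocol may differ.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def angle_between_deg(u: Vec3, v: Vec3) -> float:
    """Angle in degrees between two non-zero 3D vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    # Clamp so floating-point noise cannot push acos outside its domain.
    cos_t = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_t))


def mean_angular_error_deg(
    eye: Vec3, gaze_dirs: Sequence[Vec3], targets: Sequence[Vec3]
) -> float:
    """Mean angle between gaze directions and eye-to-target directions.

    Hypothetical helper: gaze_dirs[i] was recorded while the participant
    fixated targets[i].
    """
    if not gaze_dirs or len(gaze_dirs) != len(targets):
        raise ValueError("need one or more gaze samples, one per target")
    errors = []
    for g, t in zip(gaze_dirs, targets):
        to_target = tuple(ti - ei for ti, ei in zip(t, eye))
        errors.append(angle_between_deg(g, to_target))
    return sum(errors) / len(errors)
```

Reporting the mean is only one option. A per-participant maximum or a percentile would give a more conservative error bound.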

Gaze Point Identification

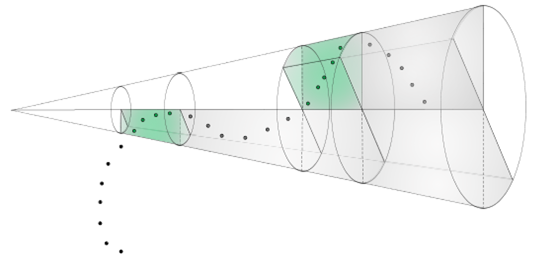

Truncated Cone-Sector

Each stage of our data processing pipeline, from angular error estimation through gaze point identification to the truncated cone-sector, is designed to preserve data integrity while extracting visual attention patterns in dynamic point cloud environments.
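One plausible reading of the truncated cone-sector step is a cone around the gaze ray, cut to a depth range so that only points near the fixated content count as gazed at. The cone's half-angle could be set from the estimated angular error. The sketch below implements that reading under stated assumptions: depth is measured along the gaze axis, the near and far bounds are free parameters, and `points_in_truncated_cone` is a hypothetical name. It is not presented as the authors' construction.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def points_in_truncated_cone(
    points: Sequence[Vec3],
    eye: Vec3,
    gaze_dir: Vec3,
    half_angle_deg: float,
    near: float,
    far: float,
) -> List[int]:
    """Indices of points inside a cone around the gaze ray, cut to [near, far].

    Assumption: depth is the projection of (point - eye) onto the gaze axis.
    """
    g_norm = math.sqrt(sum(c * c for c in gaze_dir))
    g = tuple(c / g_norm for c in gaze_dir)  # unit gaze direction
    cos_limit = math.cos(math.radians(half_angle_deg))
    inside = []
    for i, p in enumerate(points):
        d = tuple(pc - ec for pc, ec in zip(p, eye))
        dist = math.sqrt(sum(c * c for c in d))
        if dist == 0.0:
            continue  # point coincides with the eye; direction undefined
        axial = sum(dc * gc for dc, gc in zip(d, g))  # depth along gaze axis
        if not (near <= axial <= far):
            continue  # outside the truncation planes
        if axial / dist >= cos_limit:  # cosine of angle to the gaze axis
            inside.append(i)
    return inside
```

The returned indices could then be accumulated across gaze samples to build a per-point saliency map. This is one possible way to connect the step to gaze point identification.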

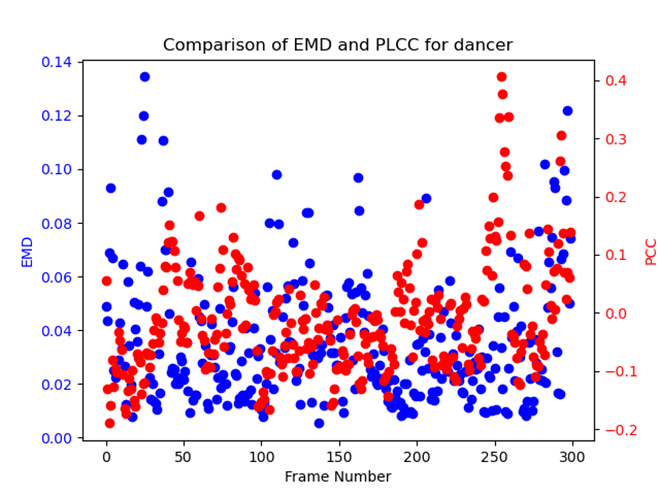

Comparison

Task-dependent

Results

Comparison consistency of visual saliency maps: a higher value means the two saliency maps are more similar.

Publications

- QAVA-DPC: Eye-Tracking Based Quality Assessment and Visual Attention Dataset for Dynamic Point Cloud in 6 DoF. In IEEE International Symposium on Mixed and Augmented Reality (ISMAR), 2023.

- Comparison of Visual Saliency for Dynamic Point Clouds: Task-free vs. Task-dependent. IEEE Transactions on Visualization and Computer Graphics, 31(5), pp. 2964-2974, 2025. PDF | GitHub Repository

- Visual-Saliency Guided Multi-modal Learning for No Reference Point Cloud Quality Assessment. In Proceedings of the 3rd Workshop on Quality of Experience in Visual Multimedia Applications, 2024.

- CompeQ-MR: Compressed Point Cloud Dataset with Eye Tracking and Quality Assessment in Mixed Reality. In Proceedings of the 15th ACM Multimedia Systems Conference, 2024.

Datasets

- Dynamic Point Cloud Quality Score & Eye-tracking Dataset (QAVA-DPC): data from the 1st, task-dependent user study. Dataset link

- Dynamic Point Cloud Eye-tracking Dataset: data from the 2nd, task-free user study. Dataset link

Contact

For questions about this research, please contact Xuemei Zhou (xuemei.zhou [at] cwi.nl)